Storage Stack

This section describes how Labo-CBZ uses Ceph, a distributed storage system, as the foundation of its storage strategy. It covers Ceph's integration with the Proxmox cluster, the configuration of the storage pools, and the distribution of OSDs across nodes.

Enhancing Storage with Ceph

Ceph is the backbone of Labo-CBZ's storage architecture, providing scalability, resilience, and performance. The subsections below describe how Labo-CBZ uses these capabilities to meet its evolving storage needs.

Key Benefits of Ceph in Labo-CBZ

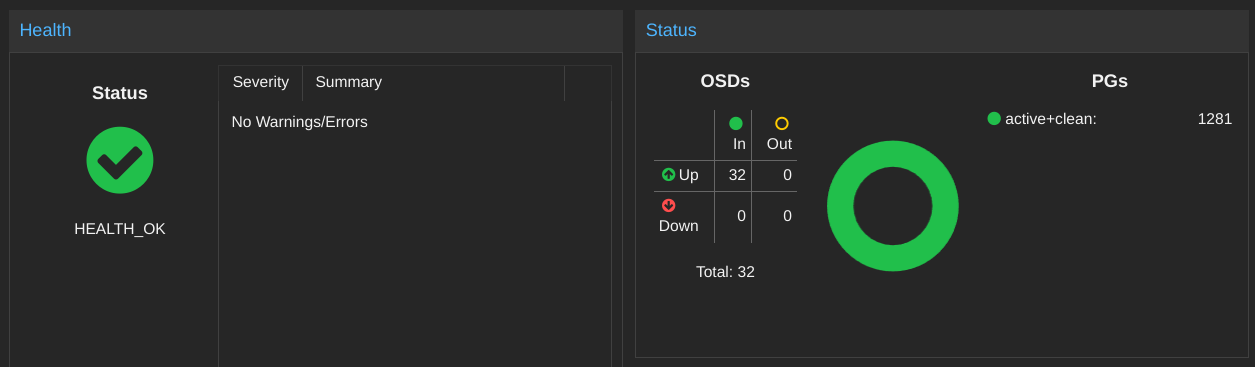

Ceph's integration into Labo-CBZ's Proxmox cluster preserves data integrity and system performance as demand grows. A cornerstone of this integration is the use of Ceph storage pools backed by Object Storage Daemons (OSDs). A pool is a logical partition of the cluster whose settings, such as the replica count, determine how data is distributed and accessed across the network.

Tailoring OSD Pools for Optimal Performance

This section describes how pool settings, such as storage media and replication size, are adjusted to meet different data requirements.

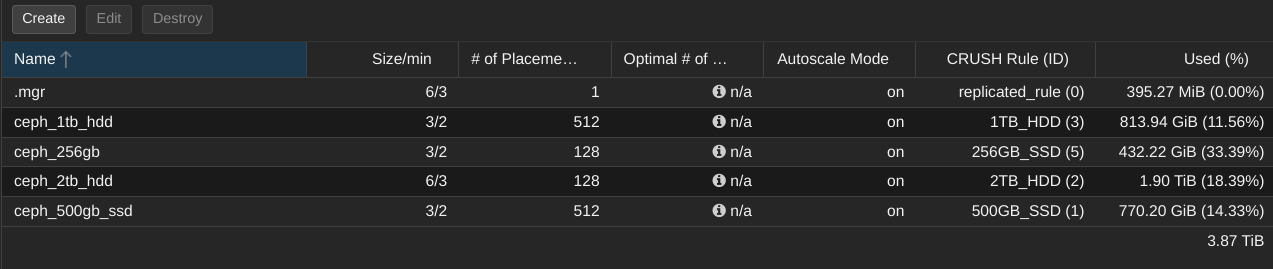

Within Labo-CBZ's infrastructure, dedicated Ceph pools are configured as storage environments for VMs and LXC containers. The following four are described here:

ceph_1tb_hdd: Intended for high-capacity storage where peak performance is not required, such as databases, monitoring systems, and logs. The pool spans 12 OSDs and uses a size/min_size configuration of 3/2: each object is stored as three replicas, and at least two replicas must be available for the pool to accept I/O.

ceph_500gb_ssd: Tuned for speed and high availability, which suits VM and LXC operating system disks as well as temporary storage. It spans 12 OSDs with a 3/2 configuration (three replicas, at least two available to serve I/O), so critical workloads stay redundant and accessible.

ceph_256gb_ssd: Designed for production environments such as Kubernetes clusters and CI/CD platforms (e.g., Jenkins, GitLab), with a focus on high performance and reliability. It spans 6 OSDs with a 3/2 configuration (three replicas, at least two available to serve I/O).

ceph_2tb_hdd: Allocated for the most sensitive data, including daily backups, Nexus artifacts, and cloud storage. It spans 6 OSDs and uses the same 3/2 configuration as the other pools (three replicas, at least two available to serve I/O), providing large capacity and redundancy for critical data.
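The 3/2 setting can be easier to reason about as a small model. The following is a minimal sketch, not Labo-CBZ's tooling: the `PoolReplication` class and its method names are hypothetical, while the generated `ceph osd pool set` commands are the standard Ceph CLI form for changing `size` and `min_size` on an existing pool.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PoolReplication:
    """Hypothetical model of a replicated pool's size/min_size settings."""

    name: str
    size: int      # replicas Ceph tries to keep of every object
    min_size: int  # replicas that must be available for the pool to accept I/O

    def accepts_io(self, replicas_available: int) -> bool:
        """True while enough replicas are reachable to serve client I/O."""
        return replicas_available >= self.min_size

    def is_degraded(self, replicas_available: int) -> bool:
        """True when fewer than `size` replicas are reachable."""
        return replicas_available < self.size

    def set_commands(self) -> List[str]:
        """Ceph CLI commands that would apply these settings to the pool."""
        return [
            f"ceph osd pool set {self.name} size {self.size}",
            f"ceph osd pool set {self.name} min_size {self.min_size}",
        ]


pool = PoolReplication(name="ceph_500gb_ssd", size=3, min_size=2)

for available in (3, 2, 1):
    print(
        f"{available} replica(s) available: "
        f"accepts I/O = {pool.accepts_io(available)}, "
        f"degraded = {pool.is_degraded(available)}"
    )

print("\n".join(pool.set_commands()))
```

With 3/2, losing one replica leaves the pool degraded but still serving I/O; losing two blocks I/O until recovery restores a second replica.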

Each pool is configured to match the performance, availability, and data protection needs of the applications and services it supports within the Proxmox cluster. By combining SSDs and HDDs and setting replication per pool, the Ceph pools provide a versatile, scalable storage layer for the cluster's varied workloads.
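Replication also determines how much of the raw disk space is usable. The sketch below is a rough estimate under stated assumptions: the OSD counts come from the pool descriptions above, but the per-OSD capacities are only inferred from the pool names (1 TB, 500 GB, 256 GB, 2 TB), each pool is assumed to have exclusive use of its OSDs, and filesystem overhead and Ceph's near-full thresholds are ignored.

```python
# Replica count shared by all pools described above (the "3" in 3/2).
REPLICA_SIZE = 3

# pool name -> (OSD count from the text, ASSUMED capacity per OSD in GB)
POOLS = {
    "ceph_1tb_hdd": (12, 1000),
    "ceph_500gb_ssd": (12, 500),
    "ceph_256gb_ssd": (6, 256),
    "ceph_2tb_hdd": (6, 2000),
}


def usable_gb(osd_count: int, osd_capacity_gb: float, size: int = REPLICA_SIZE) -> float:
    """Raw capacity divided by the replica count (overhead ignored)."""
    return osd_count * osd_capacity_gb / size


for name, (count, capacity) in POOLS.items():
    raw = count * capacity
    print(f"{name}: raw {raw} GB, usable about {usable_gb(count, capacity):.0f} GB")
```

Under these assumptions, every pool offers roughly one third of its raw capacity, which is the cost of keeping three replicas.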

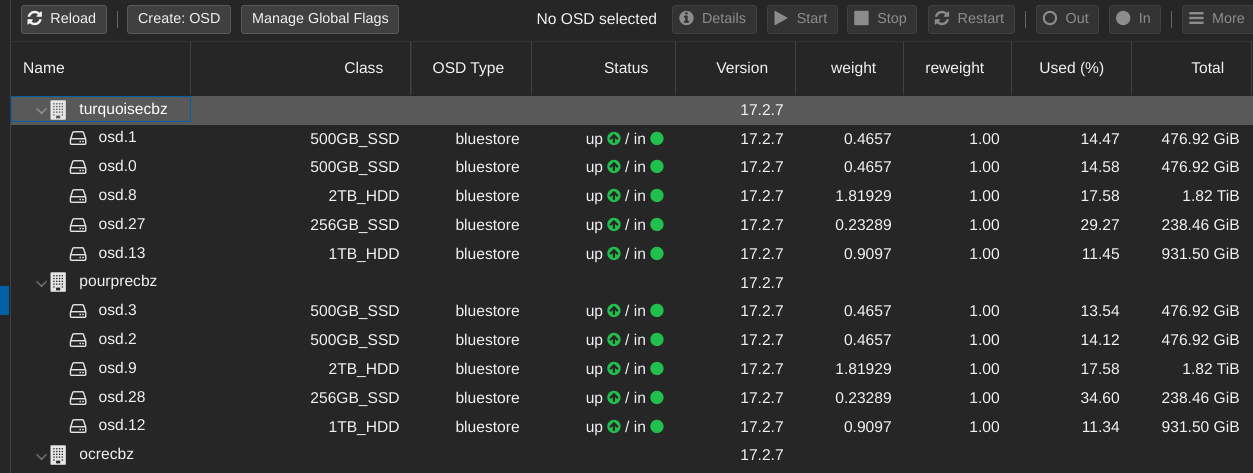

OSD Distribution

In our Proxmox cluster with Ceph integration, OSDs of each type are distributed across the cluster nodes to provide performance, redundancy, and data resilience. Here is an example of how OSDs of each type are spread over separate nodes, allowing data to be replicated across the entire cluster:

Distributing OSDs across nodes balances resource use, reduces the risk of performance bottlenecks, and strengthens data resilience throughout the Proxmox cluster. Arranging OSDs according to their device type and the demands of their workloads gives the cluster a balance of speed, storage capacity, and robustness.
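One way to sanity-check such a distribution is to confirm that each device type spans at least as many nodes as the pool's replica count, so that every replica can land on a different node. The following is a minimal sketch assuming a host-level failure domain; the node names, OSD IDs, and layout are entirely hypothetical and do not describe the real cluster.

```python
from collections import defaultdict

# HYPOTHETICAL layout: OSD id -> (node name, device class).
OSD_LAYOUT = {
    0: ("node-a", "hdd"),
    1: ("node-b", "hdd"),
    2: ("node-c", "hdd"),
    3: ("node-a", "ssd"),
    4: ("node-b", "ssd"),
    5: ("node-b", "ssd"),
}


def hosts_per_class(layout):
    """Group the nodes that host at least one OSD of each device class."""
    hosts = defaultdict(set)
    for host, device_class in layout.values():
        hosts[device_class].add(host)
    return dict(hosts)


def classes_short_of_hosts(layout, size=3):
    """Device classes that span fewer nodes than the replica count."""
    return sorted(
        device_class
        for device_class, hosts in hosts_per_class(layout).items()
        if len(hosts) < size
    )


# In this made-up layout the SSDs sit on only two nodes, so three replicas
# could not each be placed on a different node.
print(classes_short_of_hosts(OSD_LAYOUT))
```

In this made-up layout the check flags the `ssd` class, which would need an OSD on a third node before a 3-replica pool could survive a node failure without co-locating replicas.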